So what is CPU cache, exactly? It’s a small pool of very fast memory built into the processor itself. Think of it as a personal assistant who anticipates what you need and keeps it ready before you even ask.

When your CPU needs data, it checks its cache levels first, starting with L1, before going out to the much slower RAM. If the data is there, that’s a cache hit, and the request completes quickly. If it isn’t, that’s a cache miss, and the CPU has to wait on the longer trip to main memory, which slows things down.

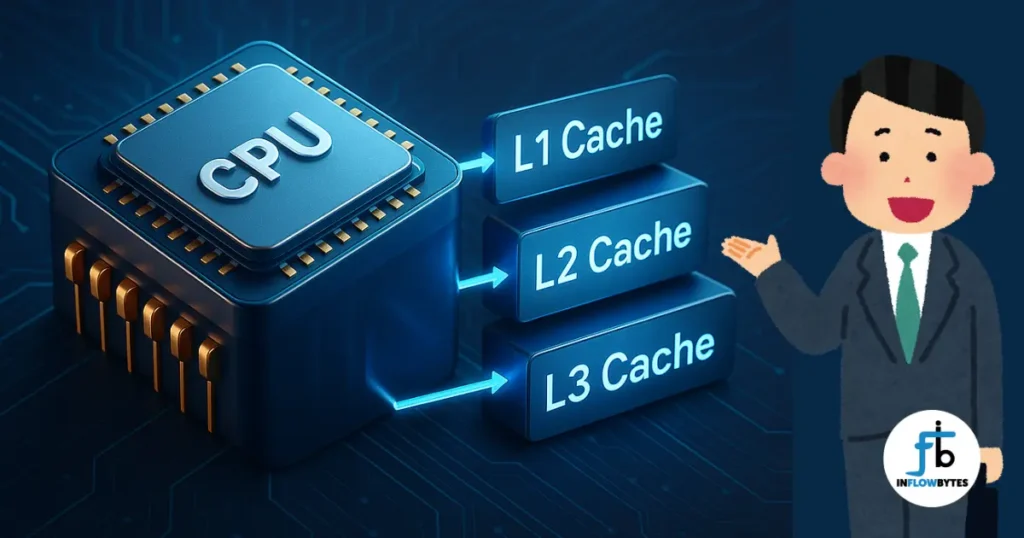

Understanding CPU Cache Levels: L1 vs L2 vs L3 Cache

Now let’s break down these CPU cache levels – it’s actually pretty straightforward once you get the hang of it.

L1 Cache: The Speed Demon

L1 cache is like having your most essential tools right in your pocket. It’s the smallest cache (usually 32KB to 128KB per core) but also the fastest. Your CPU can grab data from L1 cache in roughly 4-5 clock cycles on most current desktop cores, which is lightning fast compared with anything further away. It’s split into two parts, one for instructions (L1I) and one for data (L1D), so instruction fetches and data loads don’t compete for the same storage.

More to know:

“SD cards (memory cards) also have a cache built into their storage.”

L2 Cache: The Sweet Spot

The L2 cache sits between the very fast L1 cache and the much slower main RAM. It’s not as quick as L1, typically taking around 10-15 clock cycles per access, but it’s significantly larger, often 256KB to 2MB per processor core. That extra size matters most when you’re running demanding applications. When a request misses in L1, the L2 cache is checked next, and a hit there is far cheaper than going all the way to RAM. A well-sized L2 keeps more of a program’s working data close to the core, which shows up as a smoother, more responsive experience.

L3 Cache: The Team Player

L3 cache is the largest of the bunch (8MB to 128MB in modern CPUs) and it’s typically shared between your CPU cores, or between the cores in one cluster on some AMD designs. Yeah, it’s slower than L1 and L2 (roughly 30-70 clock cycles), but it’s still far quicker than RAM, and it plays an important role in keeping data consistent between cores (cache coherency). Think of it as the communal storage area where your CPU cores can share information efficiently.

Cache Impact on CPU Performance

How Cache Hit and Miss Actually Affects You

Here’s where the impact of cache on CPU performance gets really interesting. Let’s say your cache has a 95% hit rate, meaning that 95% of the time your CPU finds what it needs without going to RAM. The remaining 5% that has to fetch from RAM? Those requests take roughly 100-300 clock cycles, compared with a few cycles to a few dozen for a cache hit. Because each miss is so expensive, even a small miss rate ends up dominating the average access time, which is why a good cache hit rate is so important.
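To put numbers on that, here is a minimal sketch in Python. It treats the cache as a single level, and the function name, the 4-cycle hit cost, and the 200-cycle miss cost are illustrative assumptions rather than measurements from any particular CPU.

```python
def average_access_cycles(hit_rate, hit_cycles, miss_cycles):
    """Average cycles per memory access in a simplified one-level model.

    hit_rate    -- fraction of accesses served by the cache (0.0 to 1.0)
    hit_cycles  -- assumed cost of a cache hit, in clock cycles
    miss_cycles -- assumed total cost of an access that goes to RAM
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * hit_cycles + (1.0 - hit_rate) * miss_cycles


# Compare a few hit rates using the assumed 4-cycle hit and 200-cycle miss.
for rate in (0.90, 0.95, 0.99):
    cycles = average_access_cycles(rate, hit_cycles=4, miss_cycles=200)
    print(f"{rate:.0%} hit rate: about {cycles:.1f} cycles per access")
```

With these assumed costs, a 95% hit rate averages about 13.8 cycles per access, more than three times the cost of a pure hit, even though only one access in twenty goes to RAM.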

The Technical Stuff: Cache Associativity

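Don’t worry, this isn’t as complicated as it sounds. Cache associativity determines where in the cache a given memory address is allowed to live. In a direct-mapped cache, each address maps to exactly one slot, which is fast to look up but means two frequently used addresses that share a slot keep evicting each other. A fully associative cache lets any address go anywhere, which avoids those conflicts but is expensive to search. Most modern processors split the difference with “n-way set associative” designs: the cache is divided into sets, each address maps to one set, and its data can sit in any of the n slots (ways) within that set.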
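If it helps to see the mapping, here is a rough sketch of how an address could be split into a tag, a set index, and a byte offset. The 32KB size, 64-byte lines, and 8 ways are assumed example values, and real hardware does this with bit slicing rather than division.

```python
def split_address(address, cache_bytes=32 * 1024, line_bytes=64, ways=8):
    """Split a byte address into (tag, set_index, offset).

    The default geometry (32KB, 64-byte lines, 8 ways) is an assumed
    example, not the layout of any specific processor.
    """
    num_sets = cache_bytes // (line_bytes * ways)
    offset = address % line_bytes                   # byte within the line
    set_index = (address // line_bytes) % num_sets  # which set to look in
    tag = address // (line_bytes * num_sets)        # identifies the line
    return tag, set_index, offset


# Two addresses exactly 4096 bytes apart land in the same set here,
# because 64 sets * 64 bytes = 4096 bytes per "lap" through the sets.
print(split_address(0x12345))
print(split_address(0x12345 + 4096))

# With ways=1 the cache is direct-mapped: same size, but 512 one-slot sets.
print(split_address(0x12345, ways=1))
```

In the 8-way case, those two conflicting addresses can sit side by side in the same set. In the direct-mapped case, addresses that share a set index fight over a single slot.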

Inclusive vs Exclusive Cache Designs

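This is where Intel and AMD have sometimes differed. In an inclusive design, everything held in the smaller caches is also kept in the larger one, like keeping backup copies of important files; that costs some capacity but makes it simpler to track which core holds what. Exclusive designs avoid the duplication, so the levels together hold more unique data. Historically, Intel’s consumer chips used an inclusive L3, while AMD has tended to use its L3 as a mostly exclusive victim cache that is filled by data evicted from L2. Newer Intel designs have moved toward a non-inclusive L3 as well. Both approaches work well, just differently.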
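The capacity trade-off is easy to sketch with some back-of-the-envelope arithmetic. The function below is a simplified model with made-up example sizes: it only counts how much unique data L2 and L3 could hold together, and treats the exclusive figure as an upper bound.

```python
def unique_capacity_kb(l2_kb_per_core, l3_kb, cores, policy):
    """Rough upper bound on unique data held across L2 and L3, in KB.

    A simplified model: 'inclusive' assumes every L2 line is duplicated
    in L3, 'exclusive' assumes no line is ever held in both levels.
    """
    total_l2_kb = l2_kb_per_core * cores
    if policy == "inclusive":
        return l3_kb
    if policy == "exclusive":
        return l3_kb + total_l2_kb
    raise ValueError("policy must be 'inclusive' or 'exclusive'")


# Assumed example chip: 8 cores, 512KB of L2 per core, 32MB of shared L3.
for policy in ("inclusive", "exclusive"):
    kb = unique_capacity_kb(512, 32 * 1024, 8, policy)
    print(f"{policy}: up to {kb / 1024:.0f}MB of unique data")
```

For this hypothetical chip, the exclusive layout gains 4MB of effective capacity. The inclusive layout gives that up in exchange for simpler bookkeeping.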

Real-World Cache Performance Examples

AMD’s X3D processors show the impact of cache dramatically. The Ryzen 7 5800X3D has 96MB of L3 cache thanks to 3D V-Cache technology, which stacks extra cache on top of the processor die. Gaming gains of 15-25% over standard variants are commonly cited, although the uplift varies a lot from game to game. The extra capacity helps most in games whose working data would otherwise spill out to RAM.

Intel has leaned more on cache efficiency than on sheer L3 size, aiming to raise hit rates through prefetching and smarter cache management. The competition between Intel and AMD continues to drive innovation in both capacity and efficiency.

Cache Performance in Different Applications

CPU Cache in Gaming

Modern games benefit enormously from large caches, particularly L3 cache. On the CPU side, game engines constantly touch game state, scene and model data, and physics data, so cache hit rates matter for keeping frame rates high. Processors with substantial L3 cache often outperform higher-clocked alternatives in gaming scenarios.

Cache in Multitasking and Productivity

Multitasking workloads stress cache systems differently than gaming. Multiple applications compete for cache space, leading to increased miss rates. Larger L2 and L3 caches help maintain performance when switching between applications, reducing the performance penalty of context switching.

Machine Learning and Real-Time Applications

Machine learning workloads benefit from substantial cache capacity when the data they are actively working on fits in it. Training neural networks involves repeated access to weight matrices and training data, so keeping the active portion of those matrices in cache is critical for performance. Real-time applications require predictable cache behavior to maintain consistent response times.

Does Cache Size Affect Performance?

Cache size directly impacts performance, but with diminishing returns. The big gains come when a larger cache lets a program’s working data fit where it previously didn’t; once the frequently used data already fits, extra capacity adds little. That’s why going from 32MB to 64MB of L3 can transform one workload and barely register in another. The relationship between cache size and performance depends heavily on workload characteristics and memory access patterns.

How well the cache suits the application matters more than raw cache size. A processor with effective prefetching and replacement policies and moderate capacity can outperform one with a larger but less well-tuned cache.

Cache Memory Optimization Strategies

Modern CPUs employ sophisticated prefetching algorithms to predict future memory access patterns and preload cache accordingly. These hardware prefetchers analyze memory access sequences and speculatively fetch data before explicit requests, improving effective hit rates.
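As a toy illustration, the sketch below models the simplest possible scheme, a next-line prefetcher that fetches line n+1 whenever line n is touched. Real hardware prefetchers are far more sophisticated, and this model assumes an unlimited cache and perfectly timed prefetches, so treat it as a way to build intuition only.

```python
def demand_misses(addresses, line_bytes=64, prefetch_next_line=False):
    """Count accesses that miss in a toy cache with unlimited capacity.

    With prefetch_next_line=True, every access also pulls in the
    following cache line, mimicking a very simple next-line prefetcher.
    """
    cached_lines = set()
    misses = 0
    for address in addresses:
        line = address // line_bytes
        if line not in cached_lines:
            misses += 1
            cached_lines.add(line)
        if prefetch_next_line:
            cached_lines.add(line + 1)  # speculative fetch of the next line
    return misses


# Sequential walk over 100 cache lines, reading 8 bytes at a time.
sequential = range(0, 64 * 100, 8)
# Strided walk that touches only every other cache line.
strided = range(0, 128 * 100, 128)

for name, pattern in (("sequential", sequential), ("strided", strided)):
    plain = demand_misses(pattern)
    prefetched = demand_misses(pattern, prefetch_next_line=True)
    print(f"{name}: {plain} misses without prefetch, {prefetched} with")
```

The sequential walk goes from one miss per line to a single miss at the start, while the strided walk sees no benefit because the line it needs next is never the one that was prefetched. That is the kind of pattern stride-detecting prefetchers are built to catch.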

Cache replacement policies determine which data gets evicted when cache fills up. Least Recently Used (LRU) algorithms work well for most applications, while specialized workloads might benefit from different strategies.
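Here is a minimal sketch of LRU replacement, using Python’s `OrderedDict` to track recency. The class and its names are hypothetical, and hardware caches usually implement cheaper approximations of LRU per set rather than exact LRU over the whole cache.

```python
from collections import OrderedDict


class LRUCache:
    """Toy fully associative cache with exact LRU replacement."""

    def __init__(self, capacity_lines):
        self.capacity = capacity_lines
        self.lines = OrderedDict()  # oldest entry first, newest last
        self.hits = 0
        self.misses = 0

    def access(self, line):
        """Touch a cache line; return True on a hit, False on a miss."""
        if line in self.lines:
            self.lines.move_to_end(line)  # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        if len(self.lines) >= self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used
        self.lines[line] = True
        return False


# A loop over 3 lines fits in a 3-line cache: only the first pass misses.
fits = LRUCache(3)
for line in [0, 1, 2] * 3:
    fits.access(line)
print("fits:", fits.hits, "hits,", fits.misses, "misses")

# A loop over 4 lines in the same cache evicts each line just before reuse.
thrash = LRUCache(3)
for line in [0, 1, 2, 3] * 3:
    thrash.access(line)
print("thrash:", thrash.hits, "hits,", thrash.misses, "misses")
```

The second loop is the classic case where LRU does badly: a cyclic pattern just one line bigger than the cache misses on every access, which is one reason other replacement strategies exist.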

Frequently Asked Questions

How to Clear CPU Cache?

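You don’t need to. The hardware manages cache contents automatically, replacing old data with new data as needed, and there’s no user-facing “clear cache” option like the one in a browser or app. Low-level instructions for flushing the cache do exist (CLFLUSH and WBINVD on x86, for example), but they’re meant for operating systems, drivers, and specialized software rather than everyday maintenance.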

Is CPU Cache Important?

Absolutely. CPU cache represents one of the most critical performance factors in modern processors. Even processors with identical clock speeds can perform vastly differently based on cache architecture and capacity.

Which Cache Level is Fastest?

L1 cache is the fastest, with access times of roughly 4-5 clock cycles on current desktop cores. Latency rises as you move to L2 (roughly 10-15 cycles) and L3 (roughly 30-70 cycles), but capacity increases correspondingly.

How Do L1, L2, and L3 Differ?

The primary differences lie in size, speed, and scope. L1 cache is the smallest and fastest, and it’s private to each core. L2 cache balances size and speed; it’s typically per-core as well, but larger than L1. L3 cache is the largest but slowest, and it’s shared across cores so they can exchange data without going out to RAM.

Does Cache Size Improve Performance?

Cache size improves performance up to a point, with diminishing returns as size increases. The optimal cache size depends on your specific applications and workflow. Gaming and content creation typically benefit from larger caches, while basic computing tasks see minimal improvement.

Conclusion: Choosing the Right CPU Cache Configuration

CPU cache has a major influence on processor performance across nearly all computing tasks. L1 cache provides immediate access to critical data, L2 cache bridges the gap between speed and capacity, and L3 cache enables efficient multi-core operation and reduces expensive RAM access.

When selecting a CPU, consider your primary use cases. Gamers benefit from processors with substantial L3 cache like AMD’s X3D variants. Content creators and multitaskers should prioritize balanced cache hierarchies with adequate L2 and L3 capacity. Basic users can focus on overall system balance rather than cache specifications alone.

Cache technology keeps evolving. As applications become more demanding and datasets grow larger, cache efficiency matters more and more for keeping a system responsive. Understanding how cache affects performance helps you make informed decisions about CPU selection and get the most out of your hardware for your specific workloads.